AI is transforming industries, offering extraordinary opportunities for efficiency, innovation, and growth. However, with great power comes great responsibility. AI systems, if not implemented and managed diligently, can expose organizations to risks ranging from bias and security vulnerabilities to ethical problems and compliance challenges. This is where AI risk assessments come into play: they are vital for ensuring trust, transparency, and operational compliance in AI-driven processes.

This blog serves as your comprehensive guide to conducting an AI risk assessment, covering risks, frameworks, actionable steps, and best practices.

By the time you’re done reading, you will understand the importance of AI risk assessments and, most importantly, how to perform them effectively while keeping your organization protected from threats.

What Are AI Risks?

AI risk assessments start with a good understanding of the types of risks you face. AI risks span a broad range of areas, such as:

- Safety Risks: These include algorithm failures, flawed data input, or scenarios where the AI behaves in unpredictable ways.

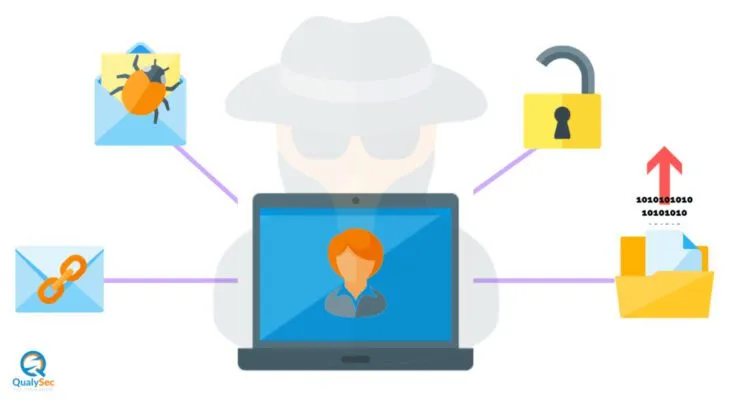

- Security Risks: Unauthorized access to AI systems, adversarial attacks, or algorithm manipulation.

- Legal and Ethical Risks: Issues stemming from biased algorithms, violating existing regulations, or unethical applications of AI technologies.

- Performance Risks: The inability of an AI system to meet its intended objectives, leading to reduced reliability or misuse.

- Sustainability Risks: Concerns related to the environmental impact of large computational processes required for AI systems.

Some real-world examples of AI risks include:

- Bias in facial recognition: Certain facial recognition algorithms have shown higher error rates when identifying individuals from specific demographic backgrounds.

- Inappropriate chatbot behavior: Some AI-powered chatbots have learned and repeated inappropriate language after being trained on biased or toxic user data.

- Autonomous vehicle safety: Self-driving cars have faced scrutiny after accidents attributed to failures in the underlying AI’s decision-making systems.

Frameworks and Standards for AI Risk Management

To manage AI risks effectively, organizations should adhere to established frameworks and standards. Here are some crucial ones to consider.

1. NIST AI Risk Management Framework

The National Institute of Standards and Technology (NIST) has developed a widely recommended, voluntary framework, the AI Risk Management Framework (AI RMF). It is organized around four core functions that help manage AI risks throughout a system’s lifecycle.

- Govern: Establish governance structures to oversee AI accountability.

- Map: Understand the landscape of AI risks in specific contexts.

- Measure: Analyze, assess, and track risks using quantitative, qualitative, or mixed methods.

- Manage: Implement safeguards and solutions to mitigate risks.

This framework provides actionable steps for risk management, from conception to deployment and beyond.

2. ISO/IEC 42001:2023

This international standard specifies requirements for establishing, implementing, maintaining, and continually improving an AI management system (AIMS) within an organization. It addresses objectives such as transparency, bias reduction, and accountability across the lifecycle of an AI system. It also offers practical guidance on implementing controls for better risk management.

3. EU AI Act

Adopted in 2024, the European Union’s AI Act is binding legislation that sets detailed requirements for the governance of AI systems. It takes a risk-based approach, sorting AI applications into tiers: unacceptable, high, limited, and minimal risk. Unacceptable-risk practices are prohibited, and high-risk systems face strict compliance requirements. Businesses operating in or with the EU need to understand these regulations thoroughly.

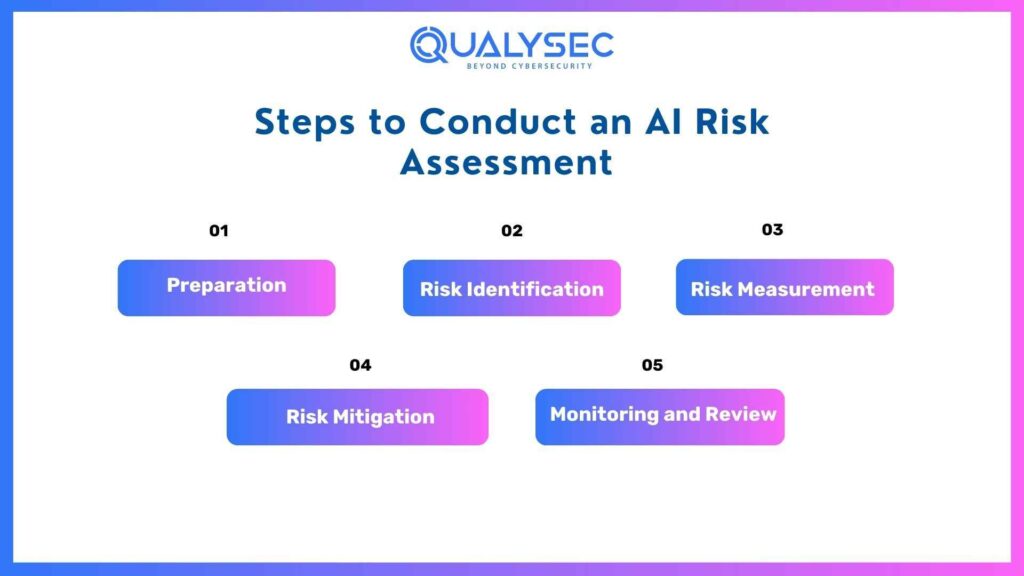

Steps to Conduct an AI Risk Assessment

1. Preparation

Adequate preparation before an AI risk assessment ensures a structured and effective risk management process.

Establish an AI Governance Committee and Leadership Roles

The foundation of any successful AI risk assessment starts with a robust governance structure. Organizations should establish an AI Governance Committee made up of key stakeholders, including representatives from IT, legal, compliance, risk management, and business units. This group will oversee the assessment, set objectives, and ensure alignment with the organization’s strategic goals.

Additionally, define clear leadership roles within the committee. For instance:

- AI Risk Officer: Responsible for overseeing the risk assessment process and implementing findings.

- Data Privacy Lead: Ensures AI systems are aligned with data protection laws, such as GDPR or CCPA.

- Ethics Specialist: Focuses on ethical implications associated with AI usage.

Define the Scope and Objectives of the Assessment

Clearly outlining the scope and objectives streamlines the assessment process. Start by addressing the following questions:

- Scope: What AI systems or projects will the assessment cover? Will it include predictive models, machine learning algorithms, or Natural Language Processing (NLP) tools?

- Objectives: Are the goals focused on regulatory compliance, operational stability, or ethical considerations?

For example, you might aim to verify compliance with industry-specific regulations while minimizing operational disruptions from AI system errors.

2. Risk Identification

The next step is identifying the risks associated with your AI systems. This requires a thorough understanding of both systemic and specific challenges.

Map AI Systems and Their Potential Risks

Begin by creating an inventory of all AI systems within your organization. For each system, consider:

- Purpose: What problem does the AI solve?

- Functionality: What data does it use, and how does it process information?

- Stakeholders: Who relies on the AI outputs?

Once mapped, identify potential risks tied to these systems. Common AI risk categories include:

- Operational Risks: Errors or malfunctions that disrupt processes.

- Regulatory Risks: Non-compliance with data protection or AI-specific laws.

- Ethical Risks: Unintended bias, lack of transparency, or misuse of AI outputs.
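
To make the inventory and risk mapping concrete, here is a minimal Python sketch of an AI system register. The field names, the `RiskCategory` enum, and the `support-chatbot` entry are hypothetical illustrations and are not part of any framework. A spreadsheet or GRC tool can hold the same information.

```python
from dataclasses import dataclass, field
from enum import Enum


class RiskCategory(Enum):
    OPERATIONAL = "operational"  # errors or malfunctions that disrupt processes
    REGULATORY = "regulatory"    # non-compliance with data protection or AI laws
    ETHICAL = "ethical"          # bias, lack of transparency, misuse of outputs


@dataclass
class IdentifiedRisk:
    description: str
    category: RiskCategory


@dataclass
class AISystemRecord:
    """One inventory entry per AI system (field names are illustrative)."""
    name: str
    purpose: str             # What problem does the AI solve?
    data_sources: list[str]  # What data does it use?
    stakeholders: list[str]  # Who relies on its outputs?
    risks: list[IdentifiedRisk] = field(default_factory=list)

    def risks_in(self, category: RiskCategory) -> list[IdentifiedRisk]:
        """Return only the risks tagged with the given category."""
        return [r for r in self.risks if r.category is category]


# Hypothetical inventory entry for a customer-support chatbot.
chatbot = AISystemRecord(
    name="support-chatbot",
    purpose="Answer routine customer support questions",
    data_sources=["historical support tickets"],
    stakeholders=["customers", "support agents"],
    risks=[
        IdentifiedRisk("Gives incorrect refund information", RiskCategory.OPERATIONAL),
        IdentifiedRisk("Retains personal data longer than policy allows", RiskCategory.REGULATORY),
        IdentifiedRisk("Answers some user groups less accurately", RiskCategory.ETHICAL),
    ],
)

for risk in chatbot.risks_in(RiskCategory.REGULATORY):
    print(f"{chatbot.name}: {risk.description}")
```

Filtering by category makes it easy to route work to the right owner. For example, regulatory risks can go to the Data Privacy Lead and ethical risks to the Ethics Specialist.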

Utilize Frameworks to Identify Specific Risk Categories

Streamline your identification process by leveraging established risk frameworks or standards, such as NIST’s AI Risk Management Framework and ISO/IEC 23894, which provides guidance on AI risk management. These frameworks provide structured methodologies for evaluating risks related to fairness, accountability, transparency, and security.

For example:

- Use the NIST framework to evaluate AI fairness and bias concerns.

- Apply the ISO guidance for evaluating cybersecurity vulnerabilities in machine learning systems.

3. Risk Measurement

Once risks have been identified, the next step involves evaluating their potential impact and likelihood.

Evaluate the Severity and Likelihood of Identified Risks

For each risk, rate its severity and likelihood, then combine the two ratings in a risk matrix.

- Severity: Determine the potential consequences if the risk materializes. For instance, will it compromise customer data, damage brand reputation, or lead to financial losses?

- Likelihood: Assess how probable it is for the issue to occur based on historical data, system testing, or expert judgment.

For example:

- A failure in an AI fraud-detection system may carry high severity (financial losses) but low likelihood if robust monitoring is in place.
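
The severity-and-likelihood scoring above can be sketched in a few lines of Python. This sketch makes several assumptions:

- Both dimensions use 1 to 5 scales.
- A risk’s score is severity × likelihood.
- The cut-offs for the low, medium, and high bands are illustrative.

Your organization’s risk appetite should set the real thresholds. The example risks are hypothetical.

```python
# Illustrative 1-5 scales; labels and cut-offs are assumptions, not a standard.
SEVERITY = {"negligible": 1, "minor": 2, "moderate": 3, "major": 4, "critical": 5}
LIKELIHOOD = {"rare": 1, "unlikely": 2, "possible": 3, "likely": 4, "almost_certain": 5}


def risk_score(severity: str, likelihood: str) -> int:
    """Score a risk as severity x likelihood, giving a value from 1 to 25."""
    return SEVERITY[severity] * LIKELIHOOD[likelihood]


def risk_level(score: int) -> str:
    """Map a score onto a rating band (hypothetical cut-offs)."""
    if score >= 15:
        return "high"
    if score >= 8:
        return "medium"
    return "low"


# Hypothetical risks rated as (severity, likelihood).
risks = {
    "fraud model misses a new fraud pattern": ("major", "unlikely"),
    "chatbot leaks personal data": ("critical", "possible"),
    "dashboard shows stale predictions": ("minor", "likely"),
}

# Rank risks from highest to lowest score to prioritize mitigation.
ranked = sorted(risks, key=lambda name: risk_score(*risks[name]), reverse=True)
for name in ranked:
    score = risk_score(*risks[name])
    print(f"{score:>2}  {risk_level(score):<6}  {name}")
```

In this sketch, the fraud-detection risk lands in the medium band despite its major severity, because its low likelihood pulls the combined score down.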

Leverage Tools and Metrics for Quantifying AI Risks

Several tools can help quantify AI risks effectively:

- Model Risk Management Platforms like SAS or DataRobot allow businesses to evaluate AI model reliability.

- Data Quality Tools assess the robustness and accuracy of the data being fed into AI systems.

- Fairness Toolkits, like IBM’s open-source AI Fairness 360, provide metrics for detecting potential bias in datasets and models.

Measuring risks through concrete metrics provides a clearer understanding of your organization’s vulnerabilities.
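
As an example of a concrete fairness metric, the sketch below computes two measures that toolkits such as AI Fairness 360 also report:

- Statistical parity difference: the unprivileged group’s favorable-outcome rate minus the privileged group’s rate.
- Disparate impact: the ratio of the two rates.

The sketch is plain Python, so it assumes no library API. The group labels and predictions are made-up data. The 0.8 cut-off is the common "four-fifths" rule of thumb rather than a universal standard.

```python
def selection_rate(predictions: list[int], groups: list[str], group: str) -> float:
    """Share of favorable (1) predictions among members of `group`."""
    outcomes = [p for p, g in zip(predictions, groups) if g == group]
    if not outcomes:
        raise ValueError(f"no records for group {group!r}")
    return sum(outcomes) / len(outcomes)


def fairness_report(predictions: list[int], groups: list[str],
                    privileged: str, unprivileged: str) -> dict[str, float]:
    """Compare favorable-outcome rates between two groups."""
    priv_rate = selection_rate(predictions, groups, privileged)
    unpriv_rate = selection_rate(predictions, groups, unprivileged)
    return {
        # 0.0 means parity; negative values disadvantage the unprivileged group.
        "statistical_parity_difference": unpriv_rate - priv_rate,
        # 1.0 means parity; NaN if the privileged group never gets a 1.
        "disparate_impact": unpriv_rate / priv_rate if priv_rate > 0 else float("nan"),
    }


# Made-up model decisions (1 = approved) for two hypothetical groups, A and B.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

report = fairness_report(predictions, groups, privileged="A", unprivileged="B")
flagged = report["disparate_impact"] < 0.8  # "four-fifths" rule of thumb
print(report, "flag for review:", flagged)
```

Here group B is approved at a third of group A’s rate, so the model would be flagged for a closer bias review.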

4. Risk Mitigation

Armed with insights from measurement, it’s time to address and mitigate the identified risks.

Develop Strategies to Address and Mitigate Risks

Create a risk mitigation plan that aligns with your risk tolerance and organizational priorities. Strategies may include:

- Enhancing data security protocols to prevent breaches.

- Adding bias-detection checks to machine learning pipelines to promote fairness.

- Setting up fallback systems to minimize disruptions if AI systems fail.
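
A fallback system can be as simple as a wrapper that routes decisions to a conservative rule whenever the model raises an error or reports low confidence. This is a minimal sketch. The functions `fraud_model` and `rule_fallback` and the 0.7 confidence threshold are hypothetical stand-ins.

```python
from typing import Callable

Prediction = tuple[str, float]  # (label, confidence between 0 and 1)


def predict_with_fallback(
    model: Callable[[dict], Prediction],
    fallback: Callable[[dict], str],
    features: dict,
    min_confidence: float = 0.7,
) -> tuple[str, str]:
    """Return (decision, source), using the fallback if the model fails or is unsure."""
    try:
        label, confidence = model(features)
    except Exception:
        # Model outage or malformed input: degrade gracefully instead of failing.
        return fallback(features), "fallback:error"
    if confidence < min_confidence:
        return fallback(features), "fallback:low_confidence"
    return label, "model"


def fraud_model(tx: dict) -> Prediction:
    """Hypothetical stand-in for a deployed fraud-detection model."""
    amount = tx["amount"]  # raises KeyError on malformed input
    return ("fraud", 0.95) if amount > 5000 else ("ok", 0.6)


def rule_fallback(tx: dict) -> str:
    """Hypothetical conservative rule: send large or malformed transactions to a human."""
    return "manual_review" if tx.get("amount", float("inf")) > 1000 else "ok"


for tx in [{"amount": 9000}, {"amount": 200}, {}]:
    print(tx, predict_with_fallback(fraud_model, rule_fallback, tx))
```

Returning the decision source alongside the decision makes it easy to count how often the fallback fires. A rising count is itself a risk signal worth monitoring.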

Implement Controls and Safeguards

Once mitigation strategies are defined, it’s crucial to implement proper controls and safeguards to minimize impact:

- Access Control: Restrict access to sensitive AI algorithms and datasets.

- Regular Audits: Schedule recurring audits, automated where possible, to verify compliance with industry standards.

- Fail-Safe Mechanisms: Build redundancy into critical systems to avoid total operational downtime.

For example, strong encryption helps safeguard data privacy, while periodic model recalibration reduces unforeseen errors.
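
Access control and audit trails often go together: every access decision is recorded so auditors can review it later. The sketch below assumes a simple, hypothetical role-to-permission map with deny-by-default behavior. Production systems would typically rely on an identity provider or policy engine instead.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission map; roles not listed here get no access.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "data_scientist": {"read:training_data", "read:model"},
    "ml_engineer": {"read:model", "deploy:model"},
    "auditor": {"read:access_log"},
}

access_log: list[dict] = []


def is_allowed(roles: list[str], permission: str) -> bool:
    """Deny by default: allow only if some role explicitly grants the permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)


def request_access(user: str, roles: list[str], permission: str) -> bool:
    """Check a permission and record the decision for later audits."""
    allowed = is_allowed(roles, permission)
    access_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "permission": permission,
        "allowed": allowed,
    })
    return allowed


print(request_access("alice", ["data_scientist"], "read:training_data"))  # True
print(request_access("bob", ["data_scientist"], "deploy:model"))  # False
print([entry["allowed"] for entry in access_log])  # [True, False]
```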

5. Monitoring and Review

An effective AI risk management process requires continuous vigilance and adaptability.

Continuous Monitoring of AI Systems for Emerging Risks

AI systems are not static: their behavior can drift as input data changes or as models are retrained. Continuously monitor them for emerging risks by:

- Using monitoring tools like AI observability platforms to track anomalies in system performance.

- Collecting feedback from end-users to identify practical risks, such as accuracy or interpretability issues.
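
One common way to track drift in a model’s inputs or scores is the Population Stability Index (PSI). PSI compares the distribution of recent data against a baseline: PSI = Σ (actual − expected) × ln(actual / expected) over a set of bins. This sketch assumes fixed bin edges and uses a widely cited rule of thumb:

- Below 0.1: stable.
- 0.1 to 0.25: moderate shift.
- Above 0.25: significant shift.

Treat those thresholds as starting points rather than standards. The score data is made up.

```python
import math
from bisect import bisect_right


def bin_proportions(values: list[float], edges: list[float]) -> list[float]:
    """Share of values falling into each bin; `edges` are sorted interior cut points."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def population_stability_index(baseline: list[float], current: list[float],
                               edges: list[float], eps: float = 1e-6) -> float:
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    expected = bin_proportions(baseline, edges)
    actual = bin_proportions(current, edges)
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # guard against log(0) for empty bins
        psi += (a - e) * math.log(a / e)
    return psi


def drift_status(psi: float) -> str:
    """Rule-of-thumb bands; tune them for your own systems."""
    if psi < 0.1:
        return "stable"
    if psi <= 0.25:
        return "moderate"
    return "significant"


# Made-up model scores: the baseline is spread evenly, recent scores skew higher.
edges = [0.25, 0.5, 0.75]
baseline = [0.1, 0.3, 0.6, 0.9] * 25
current = [0.1, 0.3, 0.6, 0.9, 0.9, 0.9, 0.6, 0.3] * 10

psi = population_stability_index(baseline, current, edges)
print(f"PSI = {psi:.3f} ({drift_status(psi)})")  # PSI = 0.137 (moderate)
```

Running a check like this on a schedule and alerting when the status leaves "stable" turns continuous monitoring into a concrete, reviewable control.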

Regular Updates to the Risk Management Plan

Revisit your risk management plan periodically to address new challenges and leverage lessons learned. Incorporate industry developments, regulatory shifts, and advancements in AI technologies. For instance:

- Review compliance protocols if new laws governing AI transparency are enacted.

- Update risk categories to account for the growing use of generative AI systems and their associated risks.

By maintaining an evolving plan, your organization will build resilience and agility in managing AI risks effectively.

Preparing for the Future of AI Risk Management

Proactively conducting AI risk assessments isn’t just a recommendation; it’s becoming a necessity for organizations of all sizes. Emerging trends, such as generative AI, explainable models, and AI-specific legislation, mean organizations must remain watchful and adaptive in their AI governance strategies.

QualySec’s expertise in vulnerability assessment and penetration testing makes it a trusted partner for businesses ready to harness the full potential of AI while staying secure and compliant.

Don’t leave your business to chance; partner with the experts. Contact QualySec today to schedule a consultation or get started with a free assessment.

Talk to our Cybersecurity Expert to discuss your specific needs and how we can help your business.

FAQs

1. What are the biggest risks of AI?

Bias, security threats, and lack of transparency. AI can be unfair, get hacked, or make decisions no one understands.

2. How do you assess AI risks?

Check data quality, security, fairness, and reliability. Make sure the AI works as expected and complies with applicable regulations.

3. How can AI risks be reduced?

Use strong security, test for bias, keep AI explainable, and monitor its behavior regularly.

4. Who handles AI risks in a company?

It’s a team effort – tech teams, legal, leadership, and ethics experts all play a role.
